27 November 2024

It’s been a little over a week since I announced my RunningAHEAD Analyzer. Yesterday, I made a couple of pull requests to the repository. Now that I have the new Mac mini with the M4, I’m slowly moving some of my homelab activities to it. The first thing I wanted was an ETL script that would persist my RunningAHEAD log and some analysis into a DuckDB database stored on the file system. I refactored the code to take the Jupyter notebook out of the ETL, so a notebook is now used only for analysis. In this post, I’ll describe some of the design decisions and talk about the first night of the ETL script running as a cron job on the Mac mini.

My primary goal was to run a daily process that downloads my log from the RunningAHEAD website. The Jupyter notebook I had been using was nice for working through some concepts, but I deemed it overkill for the act of downloading my log, so I refactored the code into a standard Python script called runningahead-etl.py. ETL stands for Extract, Transform, and Load; it is the data engineering term for taking data from a source, reshaping it, and loading it into a destination. Since analysis is now independent of data ingestion, the data is persisted in a DuckDB database file on the machine. I chose to do a full load each time the script runs. There isn’t enough data to warrant building a delta loading mechanism, so I assume the log downloaded from RunningAHEAD is always the full log and process all of it each day. I added logging so I can see what happened during the script’s execution when debugging later.
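As a rough sketch of the full-load idea (this is not the actual runningahead-etl.py), the snippet below parses a CSV export and rebuilds a table from scratch on every run. The header names Date, Type, and Distance, the table name workouts, and the function names are all assumptions made up for illustration. The con argument is expected to be a database connection such as the one returned by duckdb.connect().

```python
import csv
import io
import logging
from datetime import date

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
logger = logging.getLogger("runningahead-etl")


def transform(csv_text):
    """Parse CSV text into (date, activity, miles) tuples.

    The header names Date, Type, and Distance are assumed for this sketch.
    """
    rows = []
    for record in csv.DictReader(io.StringIO(csv_text)):
        miles = float(record["Distance"] or 0)  # a blank distance counts as 0
        rows.append((date.fromisoformat(record["Date"]), record["Type"], miles))
    logger.info("Parsed %d rows", len(rows))
    return rows


def full_load(con, rows):
    """Drop and rebuild the (hypothetical) workouts table, then insert every row."""
    con.execute("DROP TABLE IF EXISTS workouts")
    con.execute(
        "CREATE TABLE workouts (workout_date DATE, activity VARCHAR, miles DOUBLE)"
    )
    if rows:  # skip the insert when the export is empty
        con.executemany("INSERT INTO workouts VALUES (?, ?, ?)", rows)
    logger.info("Loaded %d rows into workouts", len(rows))
    return len(rows)
```

With DuckDB installed, something like con = duckdb.connect("runningahead.duckdb") (a made-up file name) followed by full_load(con, transform(text)) would replace the table on each run. Rebuilding everything is what makes a delta mechanism unnecessary at this data volume.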

I received my new Mac mini with the M4 processor this week. My MacBook Pro is my primary computer, but since it is a laptop that I take with me when I go places, it’s not on all the time. I wanted a machine that would always be on to execute the ETL. I’ll write more about my adventures in building a full homelab with the Mac mini, but its first use case is running my ETL. This meant I had to update the README.md file with the steps to configure the Mac mini to execute the script. At some point I’ll experiment with using Docker, but one thing at a time. I did the setup and then added a crontab entry. It executed successfully last night, so I’m sitting in a good spot.

Since the last version, I am persisting some new tables in the database:

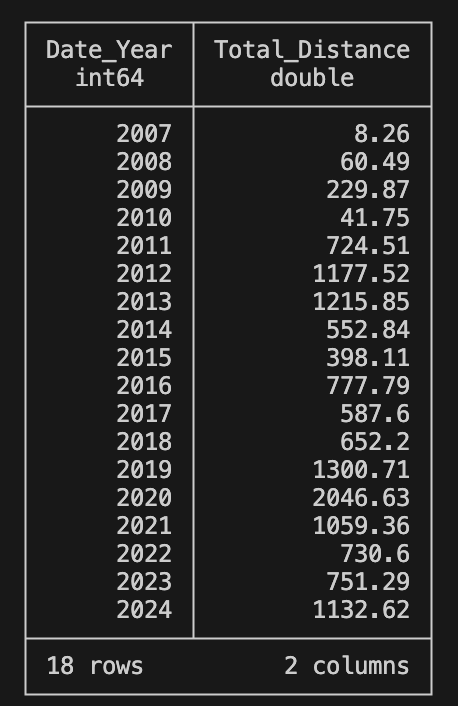

I included an analysis.py in the repository that can be executed to get the streaks longer than a set number of days and the total miles by year.
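As a rough illustration of those two calculations (this is not the analysis.py from the repository, which may well do the work in SQL against the DuckDB tables), here is a plain Python sketch. The function names and the input shapes are assumptions.

```python
from collections import defaultdict
from datetime import date, timedelta


def streaks_longer_than(run_dates, min_days):
    """Return (start, end, length) for each run of consecutive days longer than min_days."""
    streaks = []
    days = sorted(set(run_dates))
    if not days:
        return streaks
    start = prev = days[0]
    for day in days[1:] + [None]:  # None is a sentinel that closes the last streak
        if day is not None and day - prev == timedelta(days=1):
            prev = day  # still consecutive, extend the current streak
            continue
        length = (prev - start).days + 1
        if length > min_days:
            streaks.append((start, prev, length))
        start = prev = day  # begin a new streak at the current day
    return streaks


def miles_by_year(workouts):
    """Sum miles per calendar year from (date, miles) pairs."""
    totals = defaultdict(float)
    for day, miles in workouts:
        totals[day.year] += miles
    return dict(totals)
```

Calling streaks_longer_than(dates, 30) would list every streak of more than 30 consecutive days, and miles_by_year(pairs) returns a dictionary keyed by year.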

The code should be self-explanatory, but in the rest of this post I’ll list the exact steps I took to get things working on the Mac mini.

Install Homebrew:

/bin/bash -c "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/HEAD/install.sh)"

Install pyenv

brew install pyenv

Install Python 3.13.0 (latest version as of this post)

pyenv install 3.13.0

Install Google Chrome (I normally use Safari, and the webdriver will issue warnings if Chrome is not present) and the Chrome webdriver

brew install --cask chromedriver

Clone the runningahead-analyzer repository into the appropriate folder. I use ~/Developer/

git clone https://github.com/rmalesevich/runningahead-analyzer.git

Configure the Python virtual environment

cd runningahead-analyzer

pyenv local 3.13.0

python3 -m venv venv

source venv/bin/activate

Once that is done, the Python 3.13.0 interpreter for this project is available at runningahead-analyzer/venv/bin/python.

Install the requirements

pip install -r requirements.txt

Configure the .env file (refer to the README.md file)

Configure the crontab (crontab -e opens it for editing). The entry below runs the script every day at 11 PM:

0 23 * * * /FULL PATH TO FOLDER/runningahead-analyzer/venv/bin/python /FULL PATH TO FOLDER/runningahead-analyzer/runningahead-etl.py
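Since cron runs the script with no terminal attached, the logging mentioned earlier is most useful when it goes to a file. Here is a minimal sketch using only the standard library. The function name, the logger name, and the rotation limits are assumptions and not necessarily what runningahead-etl.py does.

```python
import logging
from logging.handlers import RotatingFileHandler
from pathlib import Path


def configure_logging(log_path, level=logging.INFO):
    """Send log records to a size-rotated file at log_path."""
    log_path = Path(log_path)
    log_path.parent.mkdir(parents=True, exist_ok=True)  # create the log folder if needed

    handler = RotatingFileHandler(log_path, maxBytes=1_000_000, backupCount=3)
    handler.setFormatter(logging.Formatter("%(asctime)s %(levelname)s %(message)s"))

    logger = logging.getLogger("runningahead-etl")
    logger.setLevel(level)
    # Remove any handlers from an earlier call so lines are not written twice.
    for old in list(logger.handlers):
        logger.removeHandler(old)
        old.close()
    logger.addHandler(handler)
    return logger
```

After a nightly run, the log file then shows timestamped lines for each step, which is handy when checking in the morning whether the 11 PM job succeeded.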

Since I’m running on macOS, I had to grant the script access to my Downloads folder. Once that was done, things flowed together quite nicely.
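The Downloads permission matters presumably because the exported log lands in that folder before the script reads it. One way to pick up the most recent export is sketched below. The function name and the *.csv pattern are hypothetical, and the real script may locate the file differently.

```python
from pathlib import Path


def newest_download(folder, pattern="*.csv"):
    """Return the most recently modified file in folder matching pattern, or None."""
    candidates = [p for p in Path(folder).expanduser().glob(pattern) if p.is_file()]
    return max(candidates, key=lambda p: p.stat().st_mtime, default=None)
```

For example, newest_download("~/Downloads") returns a Path to the latest matching file, or None if nothing matches.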